Prostate cancer diagnosis currently relies on time-intensive workflows. Most biopsies are collected in an office and then sent to a lab for processing before stained slides finally land on a pathologist’s desk for analysis. This process can take several days, delaying critical treatment decisions.

If pathologic analysis of prostate tissue could happen in real time, clinical decisions could be made within a matter of minutes, potentially improving oncologic outcomes.

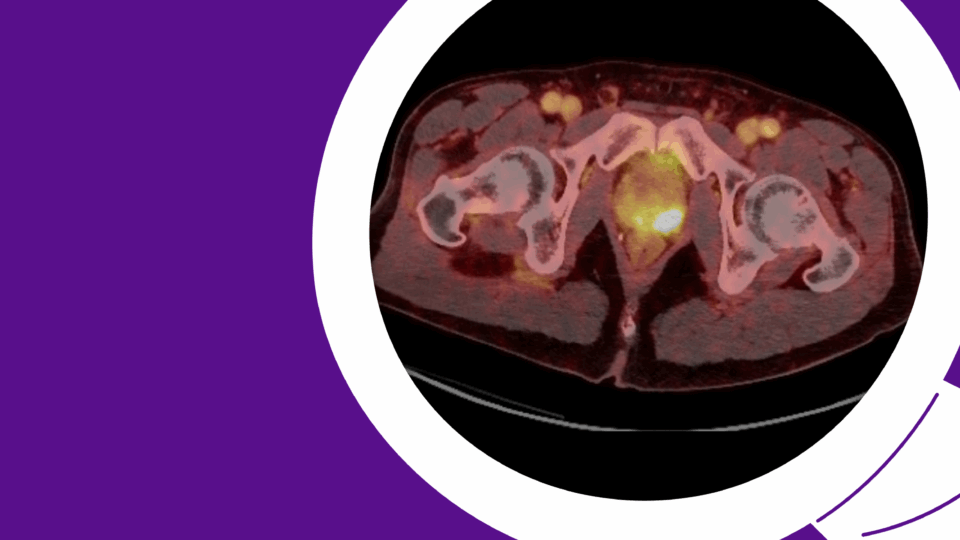

A team at NYU Langone Health and its Perlmutter Cancer Center is working to make this a reality. Their new research shows that combining stimulated Raman histology (SRH), an optical imaging technique that allows fresh, unprocessed tissue to be imaged, with artificial intelligence (AI) could allow clinicians to accurately detect prostate cancer in just under four minutes, without the need for conventional pathological processing and interpretation.

The effort is being led by urologic oncologist Samir Taneja, MD, and AI expert Daniel A. Orringer, MD. Dr. Orringer, a neurosurgeon at NYU Langone’s Brain and Spine Tumor Center, is also pioneering the methodology for use in brain tumor imaging and diagnosis, including the molecular classification of glioma.

“This approach marks an important step forward in prostate cancer diagnostics,” Dr. Taneja says. “The possible applications are numerous.”

Pairing SRH and AI

Dr. Taneja and his team, including former fellow Miles Mannas, MD, now at the Vancouver Prostate Center, hypothesized that an AI convolutional neural network (CNN), used in combination with SRH, could overcome existing barriers to real-time pathologic analysis of prostate tissue, including lengthy processing times and a shortage of pathologists with specialized training.

In the recent study, the researchers established a workflow for developing and testing an SRH CNN model, training it on 303 prostate biopsies from 100 participants. The model’s performance was then evaluated on an independent test set of 113 biopsies. A previous report from the team had confirmed that pathologists can accurately identify prostate cancer on SRH images.

Remarkably, SRH images of prostate biopsies could be generated in 2 to 2.75 minutes, and the AI system then rapidly classified biopsies containing cancer, with a potential identification time of 1 to 2.5 minutes.

Moreover, the system demonstrated an overall accuracy of 96.5 percent in detecting prostate cancer, with a sensitivity of 96.3 percent and a specificity of 96.6 percent. The CNN’s accuracy matched that of four genitourinary pathologists interpreting the SRH images.
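As a brief aid to interpreting these figures, the standard definitions of the three metrics are sketched here in terms of true positives (TP), false negatives (FN), true negatives (TN), and false positives (FP) for cancer detection, with N the total number of biopsies and π the proportion of biopsies that contain cancer. This is a general illustration, not the study’s own calculation; the underlying counts are not given in this article. The last line shows why overall accuracy, as a prevalence-weighted average of sensitivity and specificity, always falls between the two, as the reported 96.5 percent does.

```latex
\begin{aligned}
\text{Sensitivity} &= \frac{TP}{TP + FN}, \qquad
\text{Specificity} = \frac{TN}{TN + FP}, \qquad
\pi = \frac{TP + FN}{N} \\[4pt]
\text{Accuracy} &= \frac{TP + TN}{N}
= \pi \cdot \text{Sensitivity} + (1 - \pi) \cdot \text{Specificity}
\end{aligned}
```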

Broad Applicability

Future studies will focus on the ability of the SRH CNN model to inform prostate cancer grading, an area not addressed in the current work. As validation studies and collaborations continue, Dr. Taneja expects the system to have broad applicability in prostate cancer settings.

For example, the approach could improve biopsy targeting and reduce the number of biopsies required to establish a diagnosis. The technology could also be applied to intraoperative margin assessment for both radical prostatectomy and partial-gland cryoablation. “We’ve already started testing the system in cryosurgery,” says Dr. Taneja.

“In these settings,” the authors write, “the identification of prostate cancer adjacent to treatment margin may guide further treatment, making the identification of prostate cancer within one minute invaluable.”